Hearing Wrap Up: Broad and Overreaching Reporting Requirements in the White House AI EO Will Stifle U.S. Innovation

WASHINGTON—The Subcommittee on Cybersecurity, Information Technology, and Government Innovation held a hearing titled, “White House Overreach on AI.” Subcommittee members discussed how the Biden Administration’s executive order (EO) on AI will hamstring U.S. innovation and leadership. Subcommittee members highlighted provisions in the EO that lack statutory authority, undermine the role of Congress, and establish heavy-handed industry controls.

Key Takeaways:

President Biden’s EO on artificial intelligence is a clear example of executive overreach and threatens to hinder American leadership in AI development.

- Jennifer Huddleston—Technology Policy Research Fellow at the Cato Institute—spoke on the regulatory dangers within the Biden EO that would harm U.S. leadership in AI: “The United States has a chance to distinguish itself from more regulatory approaches once again and embrace an approach that allows entrepreneurs and consumers to use technology to find creative solutions to problems and needs. The recent AI executive order, however, signals a more regulatory approach to this new technology.”

- Adam Thierer—Resident Senior Fellow on Technology and Innovation at the R Street Institute—discussed the ramifications of the Biden Administration’s EO and explained why the U.S. should have an innovation-oriented framework on AI: “First, the United States needs a national pro-innovation policy framework for artificial intelligence (AI) that builds on the winning bipartisan vision Congress crafted for the internet and digital technology in the 1990s. Second, if we fail to get this AI policy framework right, it will have profound consequences for America’s global competitive advantage and geopolitical security. Third, the Biden administration’s recent AI Executive Order is no substitute for this needed national policy framework and, worse yet, it could undermine that objective.”

The Biden EO inappropriately invokes the wartime emergency powers of the Defense Production Act (DPA) to compel information from private companies, which would cripple U.S. development and advancement of AI.

- Neil Chilson—Head of AI Policy at the Abundance Institute—warned that the misuse of the DPA in the EO will harm AI developers in the U.S.: “The Biden administration is not using the DPA to spur the production of AI or computing facilities for government use and to support national security. Instead, the EO deploys the DPA to demand highly confidential documents from companies that may not even have a contractual relationship with the government and to drive the adoption of government-established quality control measures for commercially available products to be used by normal Americans and businesses.”

Member Highlights:

Subcommittee on Cybersecurity, Information Technology, and Government Innovation Chairwoman Nancy Mace (R-S.C.) discussed the harmful reporting requirements in Biden’s EO and the consequences they would have.

Rep. Mace: “My first question is the Commerce Department could not protect Secretary Raimondo’s own email account from being hacked last year. Yet, this EO requires firms to share with the agency on a daily basis the crown jewel secrets of the most powerful AI systems on earth. First question, can we trust Commerce to ensure this highly sensitive data doesn’t fall into the hands of China or another foreign adversary?”

Mr. Thierer: “No.”

Ms. Huddleston: “I think we’ve seen that there is a greater need for discussion in improving cybersecurity in the government and beyond.”

Mr. Chilson: “No, and we shouldn’t have to.”

Rep. Mace: “Should AI model developers have to give the government all of their test results and test data, even those concerning politics and religion?”

Mr. Thierer: “No.”

Ms. Huddleston: “I think it’s a highly concerning proposal with significant consequences for innovation.”

Mr. Chilson: “No.”

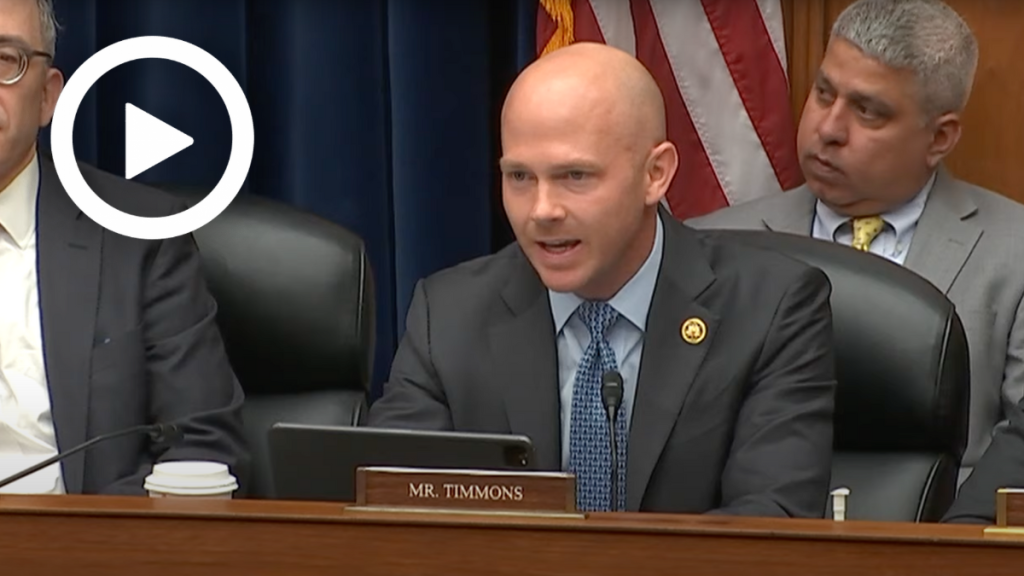

Rep. William Timmons (R-S.C.) highlighted the impact of the EO reporting requirements on U.S. innovation.

Rep. Timmons: “So all of the reporting requirements, does that not chill innovation? There’s a lot of proprietary approaches to this, is it going to chill innovation?”

Mr. Chilson: “I think it could chill innovation in a couple different ways. One, companies, when they are thinking about what sensitive data they are going to have to report to the government, are going to have to make a trade-off. Are we going to grow big enough to meet the caps that put us over this reporting threshold, or are we going to stay under that, or are we going to limit ourselves artificially in order to not have to comply with these specific rules? And I think that would be to the detriment of U.S. leadership in AI.”

Rep. Tim Burchett (R-Tenn.) discussed the inappropriate use of the Defense Production Act to regulate private industry.

Rep. Burchett: “Do you think using the Defense Production Act (DPA) to regulate artificial intelligence is a bit of an overreach, and would Congress be better suited to regulate artificial intelligence?”

Mr. Thierer: “Yes, Congressman, I think that’s right. The authority begins here to decide what the Defense Production Act should do, and I think now we are witnessing pretty excessive overreach from the statute.”

Rep. Burchett: “Do you all think that this executive order could stifle artificial intelligence innovation?”

Mr. Chilson: “I do, and I think the use of the DPA here undermines some of the other important goals that Congresswoman Pressley was pointing out about government uses and the risks of government uses of AI.”
