Hearing Wrap Up: Filling Legal Gaps and Bolstering Enforcement Can Help Combat Harmful Deepfakes

WASHINGTON—The Subcommittee on Cybersecurity, Information Technology, and Government Innovation held a hearing titled, “Addressing Real Harm Done by Deepfakes.” Subcommittee members discussed how the nonconsensual distribution of AI-enhanced intimate images and child sexual abuse material (CSAM) is proliferating on the internet and social media sites, overwhelmingly victimizing women and children. Subcommittee members emphasized the need to plug gaps in existing federal and state laws, which are generally aimed at pornographic images and video of actual people – not realistic-looking digital forgeries of identifiable individuals.

Key Takeaways:

Recent advances in deepfake technology make it much easier to create and disseminate sophisticated fake images, video and audio that appear real and are increasingly proliferating on the internet and social media, where they can be used to cause harm to individuals.

- Dorota Mani, the parent of a daughter victimized by deepfake pornography, described the impact the incident had and shared how both she and her daughter are now advocating for prevention mechanisms and greater accountability for perpetrators: “It was confirmed that my daughter was one of several victims involved in the creation and distribution of AI Deep Fake Nudes by her classmates. This event left her feeling helpless and powerless, intensified by the lack of accountability for the boys involved and the absence of protective laws… Since that day, my daughter and I have been tirelessly advocating for the establishment of AI laws, the implementation of AI school policies, and the promotion of education regarding AI.”

This harm often impacts individual women and children, who may be targeted via deepfake images of adult pornography or child sexual abuse material (CSAM).

- John Shehan, Vice President of the Exploited Children Division at the National Center for Missing and Exploited Children, expanded on the increased prevalence of CSAM due to AI: “GAI (generative artificial intelligence) technology is expanding and growing more sophisticated and accessible at an incredibly fast rate, making it crucial for Congress and child-serving professionals to closely monitor the implications on child safety and to address legislative/regulatory gaps and needed mandatory best practices. We look forward to working with members of this Subcommittee and all of our Congressional partners to ensure that child safety is prioritized when considering dangers inherent to offenders’ use of GAI technology and potential legislative remedies.”

The rapid growth of deepfakes is creating new challenges for parents and for law enforcement entities at the federal, state and local levels. In many instances, existing criminal and civil statutes concerning pornography and CSAM require updating to clearly address deepfake media.

- Carl Szabo, Vice President and General Counsel at NetChoice, highlighted a feasible way for policymakers to approach solutions to deepfakes: “By doubling down on enforcement, fostering public-private collaboration, and judiciously updating laws to cover unique AI harms, policymakers can effectively combat malicious deepfakes without compromising the technology’s vast beneficial potential.”

Member Highlights:

Subcommittee Chairwoman Nancy Mace (R-S.C.) discussed with the mother of a victim of deepfake porn the impact that the experience has had on her family.

Rep. Mace: “My first question to you and in your written testimony, you talk about what was done to your daughter, you say this event left her feeling helpless and powerless. As a mom, can you talk to us a little bit about what this has done to your family?”

Ms. Mani: “The moment when Francesca was informed by her counselor that she was one of the AI victims, she did feel helpless and powerless. And then she went out from the office and she noticed this group of boys making fun of a group of girls that were very emotional in the hallway. In that second, she turned from sad to mad. Now because of you [Subcommittee Members], all of you, we feel very empowered because I think you guys are listening.”

Rep. William Timmons (R-S.C.) spoke on the need to fill in legal gaps to provide stricter enforcement against deepfakes.

Rep. Timmons: “Mr. Szabo, you said that we don’t really need a lot of new laws and Dr. Waldman, you’ve taken a pretty different stance on that. I mean, it seems to me that there are indeed holes and there’s gaps whether it’s revenge porn, non-consensual porn, child porn. I think Mr. Szabo, you would agree that we do need to address that. There’s just a lot of gray area surrounding causes of action and ways to be made whole whether it’s using civil law to extract financial benefits or criminal law in certain circumstances, but you would agree that we do need to address the holes as it relates to those areas?”

Mr. Szabo: “One hundred percent. You have laws like FCRA, HIPAA, all these laws, Rohit Chopra is the director of the CFPB. He and I probably agree on not much. Even he recognizes that you cannot hide behind a computer because existing laws apply. But here we do have gaps that do need to be filled.”

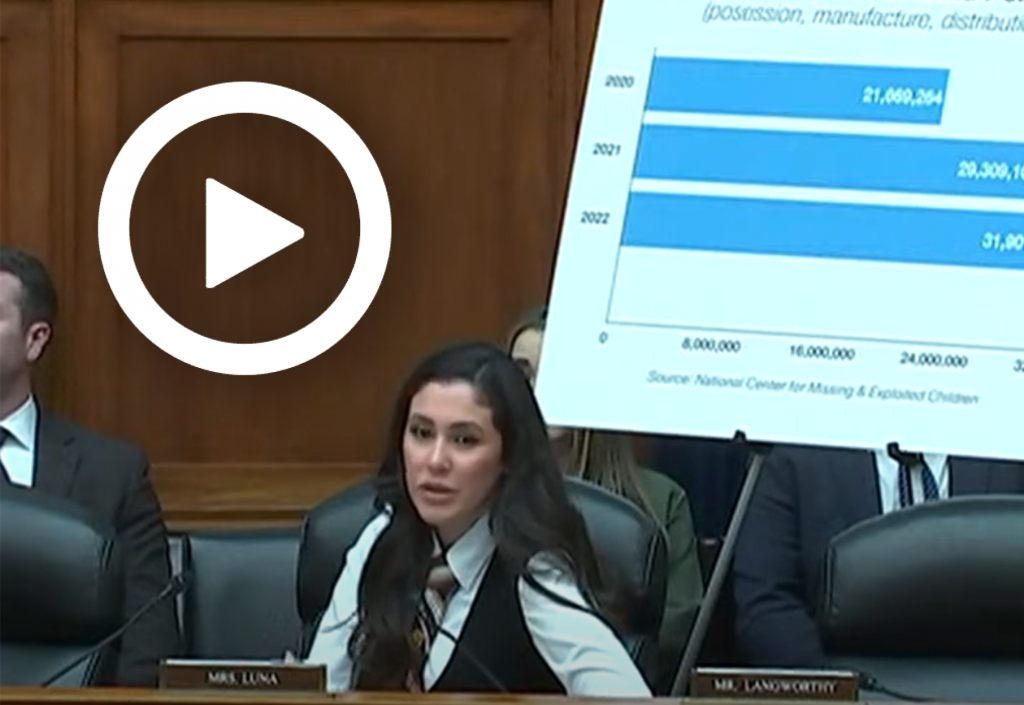

Rep. Anna Paulina Luna (R-Fla.) broke down the global scale of deepfakes and the proliferation of child sexual abuse material.

Rep. Luna: “Question is for Mr. John Shehan. You previously stated in a report that CSAM online platforms grew from 32 million in 2022 to 36 million in 2023. What factors do you think have contributed to this trend?”

Mr. Shehan: “That’s an excellent question and much of it is around just the global scale and ability to create and disseminate child sexual abuse material. This is truly a global issue. The 36 million reports last year involved more than 90% outside the United States. Individuals using US servers, but we’re also seeing a massive increase in the number of reports that we’re receiving regarding the enticement of children for sexual acts. Your chart there, you know, in 2021, we had about 80,000 reports regarding the online enticement of children. Last year, it jumped up to 180,000 reports. Not even through the first quarter of this year, we’re already over 100,000 reports regarding the enticement of children. Many of these cases are involving generative AI, others are financial sextortion.”