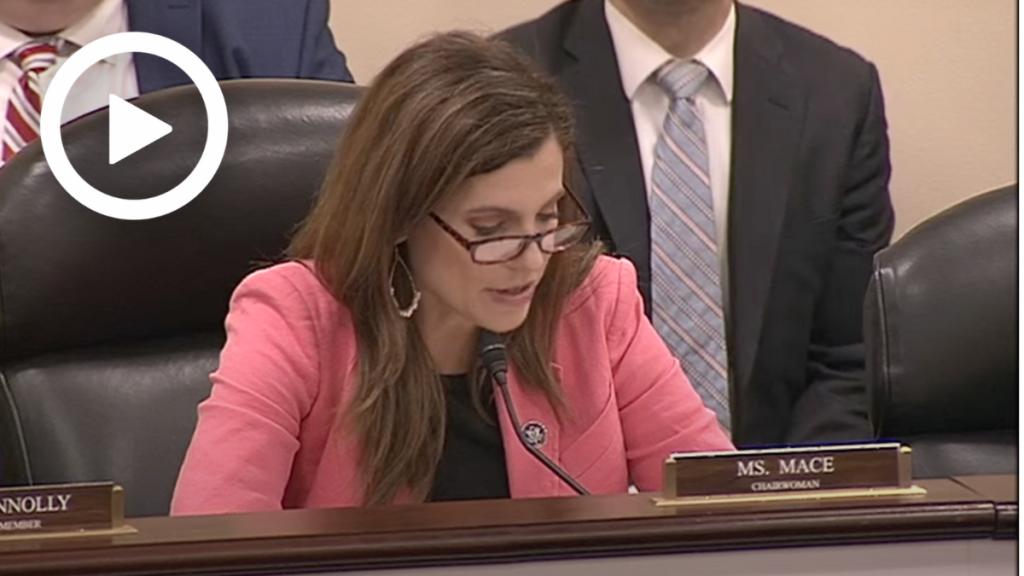

Mace: Federal Government Must be Ready for Widespread Impacts of AI Integration

WASHINGTON – Subcommittee on Cybersecurity, Information Technology, and Government Innovation Chairwoman Nancy Mace (R-S.C.) delivered opening remarks at a hearing titled “How are Federal Agencies Harnessing Artificial Intelligence?” In her opening statement, Subcommittee Chairwoman Mace emphasized that as the nation’s largest employer, the federal government must be ready for the widespread impacts of AI. She continued by warning that like any tool, AI can be abused when used for the wrong purposes or without proper safeguards. She finished by highlighting her development of legislation to ensure federal agencies employ AI systems effectively, safely, and transparently.

Below are Subcommittee Chairwoman Mace’s remarks as prepared for delivery.

Good afternoon, and welcome to this hearing of the Subcommittee on Cybersecurity, Information Technology, and Government Innovation.

At the very first hearing this subcommittee held this year, expert witnesses told us artificial intelligence is likely to bring disruptive innovation to many fields. AI should instigate economic growth, higher standards of living and improved medical outcomes. Virtually every industry and institution will feel its impact.

Today, we’ll discuss the impact of AI on the largest, most powerful institution in the Nation—the federal government.

As we know, the government today performs an ever-expanding swath of activities – from securing the homeland to predicting the weather to cutting benefits checks. Many of these functions could be greatly impacted by AI. That’s clear from the hundreds of current and potential AI use cases posted publicly by federal agencies, pursuant to an Executive Order issued under the last Administration.

Federal agencies are attempting to use AI systems to enhance border security, to make air travel safer, and to speed up eligibility determinations for Social Security disability benefits, just to name a few use cases.

AI will also shake up the federal workforce itself. We hear a lot about how AI could disrupt the private sector workforce—transforming or eliminating some jobs, while creating others. Well, the federal government is the Nation’s largest employer. And many of those employees work in white-collar occupations AI is already reshaping, because it can perform many routine tasks more efficiently than humans. That will allow federal employees to focus on higher-order work that maximizes their productivity.

In fact, a Deloitte study estimated that the use of AI to automate tasks of federal employees could eventually yield as much as $41 billion in annual savings by reducing required labor hours. A separate study by the Partnership for Public Service and the IBM Center for the Business of Government identified 130,000 federal employee positions whose work would likely be impacted by AI—including 20,000 IRS tax examiners and agents.

That of course begs the question whether we need to hire tens of thousands of new IRS employees when AI could transform or even replace the work of much of its current staff.

AI can make government work better. But it’s still just a tool—albeit an incredibly powerful one. And like any tool, it can easily be abused when used for the wrong purposes — or without proper safeguards.

AI systems are often fueled by massive troves of training data that flow through complex algorithms. These algorithms can yield results their own designers are unable to predict — and struggle to explain.

So, it’s important we have safeguards to prevent the federal government from exercising inappropriate bias. We also need to ensure that the federal government’s use of AI does not intrude on the privacy rights of its own citizens.

Bottom line is we need the government to harness AI to improve its operations, while safeguarding against these potential hazards.

That’s why Congress enacted the AI in Government Act in late December 2020, soon before the current Administration took office. That law requires the Office of Management and Budget to issue guidance to agencies on the acquisition and use of AI systems. It also tasks the Office of Personnel Management with assessing federal AI workforce needs.

But the Administration is way overdue in complying with this law. OMB is now more than two years behind schedule in issuing guidance to agencies. And OPM is more than a year overdue in determining how many federal employees have AI skills – and how many need to be hired or trained up.

The Administration’s failure to comply with these statutory mandates was called out in a lengthy white paper issued by a Stanford University AI institute. The paper’s authors also found that many agencies had not posted the required AI use case inventories. Others had omitted key use cases, including DHS omitting an important facial recognition system. The Stanford paper summed up the Administration’s noncompliance with various mandates by concluding: “America’s AI innovation ecosystem is threatened by weak and inconsistent implementation of these legal requirements.”

Most of the AI policy debate is focused on how the federal government should police the use of AI by the private sector. But the executive branch can’t lose focus on getting its own house in order. It needs to appropriately manage its own use of AI systems – consistent with the law.

This subcommittee will keep insisting the Administration carry out laws designed to safeguard government use of AI.

And I’m developing further legislation to ensure federal agencies employ AI systems effectively, safely and transparently.

I expect this hearing will help inform these efforts.

With that, I yield to the Ranking Member of the Subcommittee, Mr. Connolly.