Hearing Wrap Up: Action Needed to Combat Proliferation of Harmful Deepfakes

WASHINGTON—The Subcommittee on Cybersecurity, Information Technology, and Government Innovation today held a hearing titled “Advances in Deepfake Technology.” Subcommittee members discussed with subject matter experts how bad actors and hostile nations can use deepfake technology to distort reality and create harmful images. They also explored ways to authenticate and identify artificial images. In addition, members discussed potential legislative options to protect against the harm that deepfake images can cause.

Key Takeaways:

Advances in the development and distribution of deepfakes will make it increasingly difficult to discern real images and video from artificial ones. This could lead to a crisis of confidence in the public as to whether what they see and hear in cyberspace is real. The authentication of content provenance by image creators can help maintain public confidence.

- Mounir Ibrahim, Vice President of Public Affairs and Impact at Truepic, highlighted the importance of establishing protocols in relation to deepfakes: “In my opinion, this is one of the greatest challenges we face today as synthetic and AI-generated content proliferates at a rapid rate. Some estimates are that in 1-2 years, 90% of new digital content created online will be wholly or partially synthetic. Without wide adoption of interoperable standards to clearly differentiate authentic content, AI-assisted, and fully generated content, our entire informational ecosystem will be at risk.”

Women and children are most likely to be victimized by the creation and dissemination of deepfake pornography. Legislative action may be necessary to combat this scourge and protect innocent victims from harm and humiliation.

- Dr. David Doermann, Interim Chair of Computer Science and Engineering at the State University of New York at Buffalo, discussed the need for proactive action to fight against deepfakes: “Deepfake technology offers innovative possibilities but severely threatens our society. We must act proactively to address these challenges and mitigate potential harm. I look forward to working with Congress and relevant stakeholders to find practical, balanced solutions that protect our digital landscape and uphold the values of transparency and trust.”

- Sam Gregory, Executive Director at Witness, spoke on the size and scope of the threats women face from deepfakes: “Non-consensual sexual deepfake images and videos are currently used to target private citizens and public figures, particularly women. The scale of this is growing: a recent analysis estimated that even based on public site-sharing of these videos (rather than videos posted on social media, those shared privately, or manipulated photos) by the end of this year, more videos will have been produced in 2023 than the total number of every other year combined.”

Member Highlights:

Subcommittee Chairwoman Rep. Nancy Mace (R-S.C.) asked witnesses what can be done to protect women and children from being abused by the circulation of deepfake images.

Rep. Mace: “It’s alarming to me how easy it has become to create and distribute realistic pornographic images of actual women and girls. These images can cause lifelong and lasting humiliation and harm to that woman or girl. What can we do to protect women and girls from being abused in this manner?”

Mr. Ibrahim: “This is, as everyone noted, the main issue right now with generative AI, and there are no immediate silver bullets. Several colleagues have noted media literacy and education and awareness. Also, a lot of these non-consensual pornographic images are made from open-source models. There are ways in which open-source models can potentially leverage things like provenance and watermarking so that the outputs of those models will have those marks and law enforcement can better detect and take down those images.”

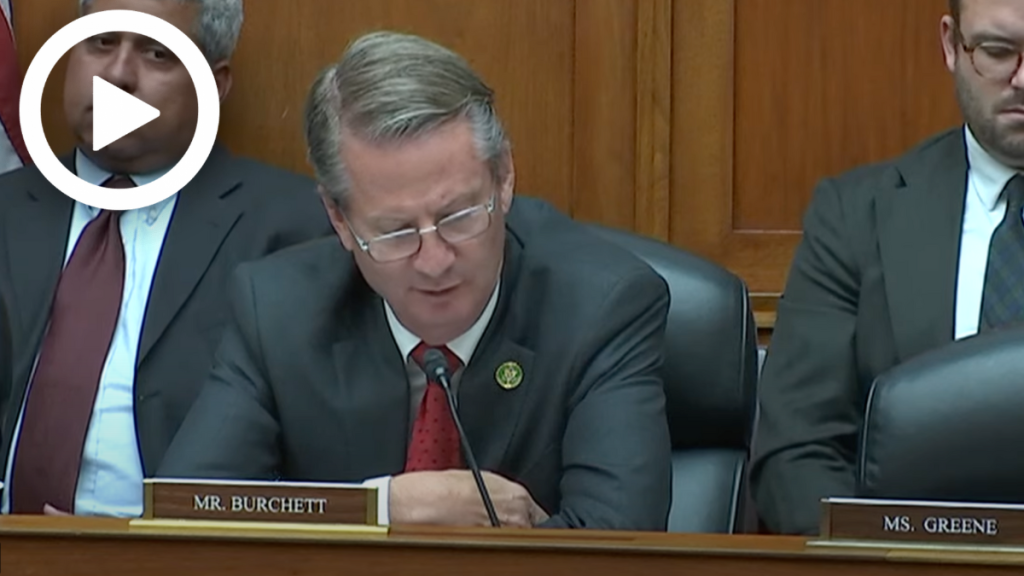

Rep. Tim Burchett (R-Tenn.) asked how harm to children from new deepfake technology can be combated.

Rep. Burchett: “How can we prevent this technology from being used to create child sex abuse material?”

Dr. Doermann: “I’ll just emphasize from the technology point of view, this is a genie that is out of the bottle. We’re not going to put it back in. These types of technologies are out there, they could use it on consenting adults the same way, and it’s going to be difficult to legislate those, but the technology is there and it’s on the laptops for anyone who wants to download it from the internet. The legislation part is going to be the best approach.”

Rep. Eric Burlison (R-Mo.) asked experts what recommendations they have to better detect and authenticate synthetic images.

Rep. Burlison: “Can we talk about the technology that is possibly available to recognize or identify AI and how that might be applied in a commercial setting?”

Dr. Doermann: “The biggest challenge of any of these technologies is the scale. Even if you have an algorithm that works at 99.9 percent, the amount of data that we have at scale that we run through our major service providers makes the false alarm rates almost prohibitive. We need to have a business model for our service providers and our content providers that makes them want to take these things down. If they get clicks, that is what’s selling ads now.”