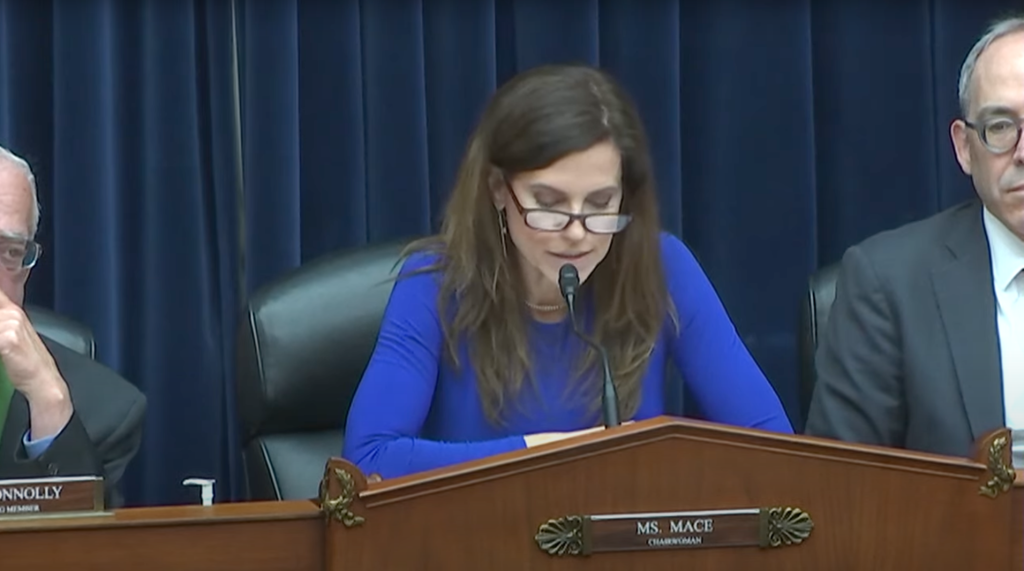

Mace Opens Second Hearing on White House Overreach on AI

WASHINGTON – Subcommittee on Cybersecurity, Information Technology, and Government Innovation Chairwoman Nancy Mace (R-S.C.) delivered opening remarks at a subcommittee hearing titled “White House Overreach on AI.”

In her opening statement, Subcommittee Chairwoman Mace emphasized how unnecessary new laws could smother AI advancements and delay the arrival of life-enhancing and life-saving discoveries. She also expressed concern over the Biden Administration Executive Order’s (EO) invocation of the Defense Production Act, which mandates that AI developers inform the government if they are even considering building novel AI systems, setting a dangerous precedent for the future.

Below are Subcommittee Chairwoman Mace’s remarks as prepared for delivery.

Good morning and welcome to this hearing.

Last October 30th, the White House released a monumentally lengthy executive order on artificial intelligence—the longest EO ever issued.

And the EO isn’t just long. It’s broad: It corrals dozens of federal agencies into a massive posse that is to go out and ride herd on every aspect of this emerging technology.

But why the stampede? We’re only just starting to grasp how AI can help and harm humanity. That’s why Congress is moving cautiously in this space. We already have a plethora of laws to which AI uses are subject, ranging from antidiscrimination to consumer protection statutes.

And unnecessary new laws could stifle AI innovation, slowing the arrival of life-enhancing and life-saving breakthroughs.

That’s why Congress is proceeding with a measured “first do no harm” approach.

Where AI applications are not captured under an existing law, we need to close those loopholes. That’s why I introduced a bill recently to ensure the distribution of nonconsensual pornography is not immune from criminal prosecution just because it’s been altered via an AI deepfake process.

But Congress wisely hasn’t authorized the Administration to go out and regulate AI differently than other technologies.

But this Executive Order does so anyway. It invokes the emergency powers of the Defense Production Act or DPA to require AI developers to notify the government if they are even considering developing powerful new AI systems. It also mandates they regularly hand over sensitive, proprietary data like testing results to the Commerce Department.

What does this have to do with defense production? The DPA gives the President extraordinary powers to ensure the supply of critical goods in time of war or national emergency.

But we’re not at war. And if artificial intelligence is an emergency, it’s not a temporary one—AI is not going away anytime soon. So, the new executive powers this EO asserts have no logical sunset.

This new reporting regime “lacks legal authority” according to the attorneys general of twenty states—including my own state of South Carolina. These AGs last month wrote a letter to the Commerce Secretary stating that the DPA “allows for the federal government to promote and prioritize production, not to gatekeep and regulate emerging technologies.”

What’s more, the gatekeeping in this EO is more likely to harm than to help our national defense.

What is the biggest national security concern around AI? It’s the risk that we relinquish our current lead in AI to China. That could have catastrophic implications for our military preparedness.

But requiring potential new AI developers to share their business plans and sensitive data with the government could scare away would-be innovators and impede more ChatGPT-type breakthroughs.

To be clear, the government does need to be proactive with respect to artificial intelligence. The Executive Branch needs to harness AI to strengthen national defense, bolster homeland security and improve the administration of benefit programs.

That’s why I’m glad that the EO contains numerous provisions to strengthen the government’s own AI workforce and enhance the government’s ability to contract with private sector AI providers.

But as the rubber hits the road on this EO—with implementation deadlines already having begun to kick in—I look forward to hearing from our panelists about where they believe it exceeds the President’s legitimate authority and where it could impede American innovation.

With that, I will now yield to Ranking Member Connolly for his opening statement.
